Queen's AutoDrive

Project Overview

It's hard to summarize the profound effect Queen's AutoDrive has had on me as an individual. From data labeller to system integration lead, to technical lead, and finally to team captain, this team has defined my undergraduate career.

Queen's AutoDrive competes annually in the GM-SAE AutoDrive Challenge II, an international competition to develop an SAE Level 4 autonomous vehicle. Given a Chevrolet Bolt and top-of-the-line equipment, we are left to our own devices to make it happen. In Year III we had to start from scratch: not a single piece of hardware, line of code, or organizational structure was carried over. In the face of this, and an ... unfortunate integration with a table ... we came together as a 90-person unit to drive autonomously when it counted. Beating teams with three years of development, we were awarded $1,000 for placing 3rd in the Intersection Challenge. I hope the video below conveys our excitement to you!

Design & Implementation

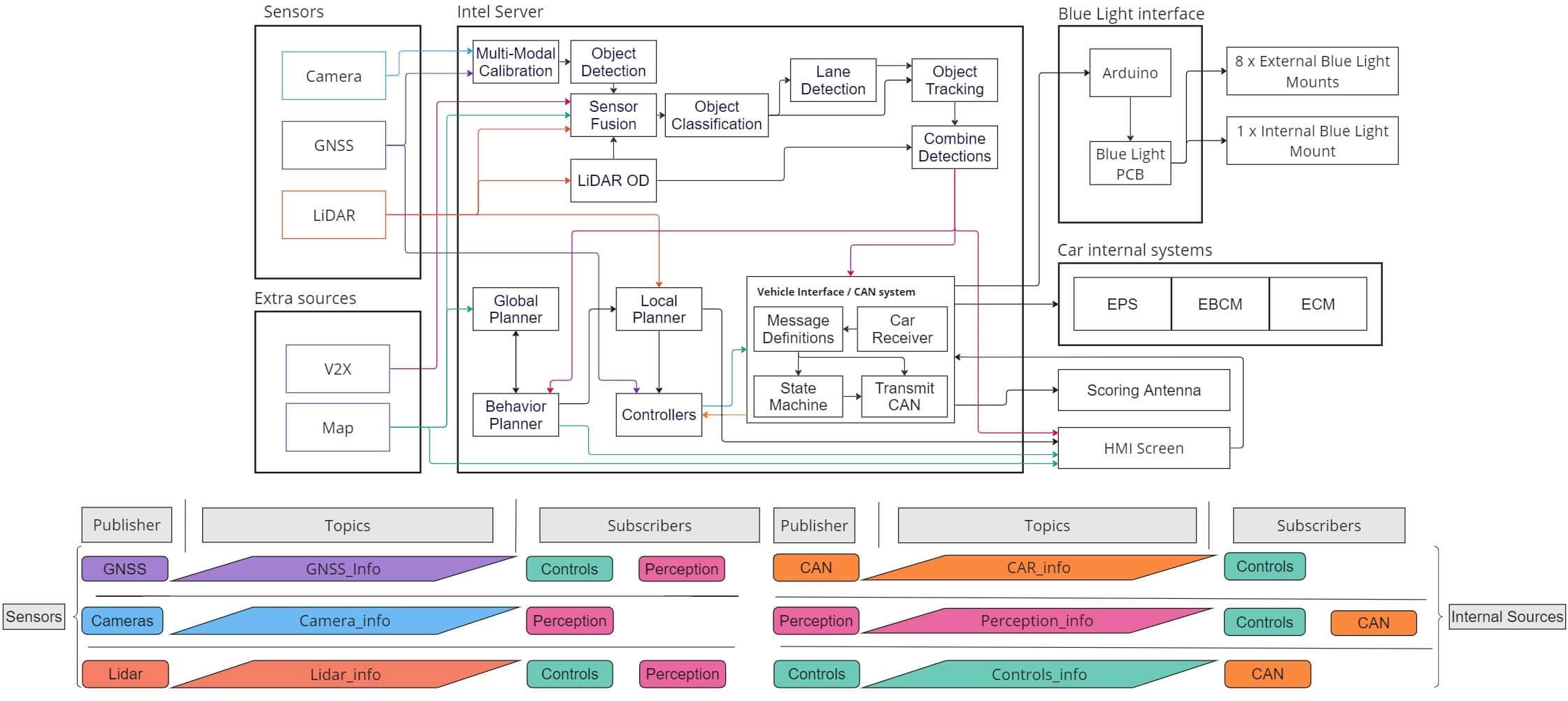

This project requires expertise from all facets of robotics: perception, control, planning, hardware design, vehicle interfacing, integration, and more.

What Did I Work On?

In addition to project management, system design, and administrative duties, I undertook numerous technical projects that were critical to the success of the team.

In Year IV my role was split into five: Team Captain, Project Manager, Operations Manager, Global Planner Team Lead, and Sensor Fusion Team Lead. Currently, I act as a design consultant and mentor to the team.

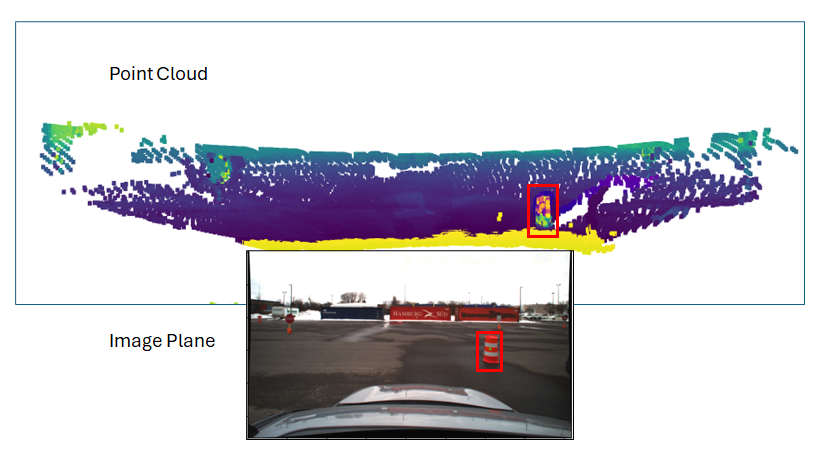

LiDAR-Camera Fusion

How can we determine the 3D position of detected objects from camera-based detections and LiDAR data? I implemented a novel approach to this problem for a 3-LiDAR, 4-camera system, with ±15 cm longitudinal error at 40 m range, running at 90 Hz.
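To make the problem concrete, here is a minimal sketch of one common baseline for this kind of fusion. It is not the approach used on the vehicle: it projects LiDAR points through a pinhole camera model and takes the per-axis median of the points that land inside a detection's bounding box. The function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the assumption that points are already in the camera frame are all hypothetical.

```python
from statistics import median


def project_point(point, fx, fy, cx, cy):
    """Project a camera-frame point (x right, y down, z forward) to pixel coordinates."""
    x, y, z = point
    if z <= 0:  # behind the image plane, so not visible
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def estimate_object_position(points, box, fx, fy, cx, cy):
    """Return the per-axis median of the points that project inside a 2D box.

    points: iterable of (x, y, z) tuples, assumed already in the camera frame.
    box: (u_min, v_min, u_max, v_max) pixel bounds of a camera detection.
    Returns None when no point falls inside the box.
    """
    u_min, v_min, u_max, v_max = box
    inside = []
    for p in points:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = uv
        if u_min <= u <= u_max and v_min <= v <= v_max:
            inside.append(p)
    if not inside:
        return None
    return tuple(median(axis) for axis in zip(*inside))


# Illustrative values only: three returns near 40 m, one off to the side, one behind.
points = [(0.0, 0.0, 40.0), (0.1, 0.0, 40.2), (-0.1, 0.0, 39.8),
          (5.0, 0.0, 40.0), (0.0, 0.0, -1.0)]
box = (600, 320, 680, 400)
position = estimate_object_position(points, box, fx=1000, fy=1000, cx=640, cy=360)
```

The median is used here rather than the mean so that a few background points caught inside the box do not drag the estimate away from the object.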

Autonomous Vehicle Global Planner

I developed a global planner on top of the Lanelet2 library, comprising a parser for the HD map, real-time localization within lane segments, shortest-path routing, and ROS2 transmission of path information. Written in Python with ROS2, this planner worked on the first try on the vehicle.
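The shortest-path routing step can be illustrated with plain Dijkstra over a directed lane graph. This is a sketch in standard-library Python under stated assumptions, not the planner's code and not the Lanelet2 API: the graph format, the lane IDs, and the edge lengths below are hypothetical.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over a directed lane graph.

    graph: {lane_id: [(successor_id, cost_m), ...]} (hypothetical format).
    Returns (total_cost_m, [lane_id, ...]), or None if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        cost, lane, path = heapq.heappop(queue)
        if lane == goal:
            return cost, path
        if lane in settled:
            continue  # a cheaper route to this lane was already expanded
        settled.add(lane)
        for successor, length in graph.get(lane, []):
            if successor not in settled:
                heapq.heappush(queue, (cost + length, successor, path + [successor]))
    return None


# Illustrative lane graph; IDs and lengths are made up.
lane_graph = {
    "A": [("B", 50.0), ("C", 20.0)],
    "B": [("D", 10.0)],
    "C": [("B", 15.0), ("D", 60.0)],
    "D": [],
}
route = shortest_route(lane_graph, "A", "D")
```

In this example the route through lane C is shorter overall than going directly from A to B, which is the case a greedy nearest-successor choice would get wrong.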

Presentations and Reports

As Team Captain, I was the principal author for:

Project Leadership Report (4th), Concept Design Report (4th), Software Requirements Specifications (5th).

I was also a principal speaker for:

Project Leadership Event (4th), Mobility Innovation (4th), Concept Design Event (2.5/100 behind 3rd).

Full Stack Integration

As the Technical Lead in Year II, I was responsible for the ROS2 integration of 11 single points of failure across all systems, using Python and ROS2.

This covered the full stack: from drivers, to raw data, to detections, to planner, to controllers, to CAN.

V2X Challenge

I worked with a partner to parse and decode MAP and SPaT messages in Python, and to transmit the data as CAN messages encoded per a custom .dbc file. We placed first in the V2X Challenge Part I.
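As an illustration of the last step, the sketch below packs one decoded signal state into an 8-byte CAN payload with Python's `struct` module. The byte layout, the phase codes, and the function names are hypothetical stand-ins; the team's actual .dbc definitions are not reproduced here.

```python
import struct

# Hypothetical phase codes and payload layout (little-endian, 8 bytes total):
# uint16 intersection ID, uint8 signal group, uint8 phase code,
# uint16 time remaining in 0.1 s units, 2 padding bytes.
PHASES = {"red": 0, "yellow": 1, "green": 2}
LAYOUT = struct.Struct("<HBBHxx")


def encode_signal_state(intersection_id, signal_group, phase, seconds_remaining):
    """Pack one signal state into an 8-byte CAN data field."""
    # Clamp to the range a uint16 of deciseconds can hold.
    deciseconds = max(0, min(round(seconds_remaining * 10), 0xFFFF))
    return LAYOUT.pack(intersection_id, signal_group, PHASES[phase], deciseconds)


def decode_signal_state(payload):
    """Inverse of encode_signal_state, as a receiving node might apply it."""
    intersection_id, signal_group, code, deciseconds = LAYOUT.unpack(payload)
    phase = next(name for name, value in PHASES.items() if value == code)
    return intersection_id, signal_group, phase, deciseconds / 10


# Illustrative values only.
payload = encode_signal_state(1234, 2, "green", 15.5)
decoded = decode_signal_state(payload)
```

A fixed-width layout like this keeps each message within the 8-byte data field of a classic CAN frame, at the cost of limiting resolution (here, 0.1 s steps for the time remaining).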

Autonomous Mode Warning Lights

I developed the autonomous mode indicator using an Arduino microcontroller connected over CAN. Based on AV readiness and the status of the safety modes, it controlled the blue autonomous mode indicator lights.

Results & Analysis

- 3rd, Intersection Challenge

- 4th, Project Leadership

- 4th, MathWorks Simulation Challenge

- 4th, Mobility Innovation Challenge

- 1st, V2X Challenge Part I

- Concept Design Event, 2.5/100 behind 3rd