LiDAR-Camera Fusion

Project Overview

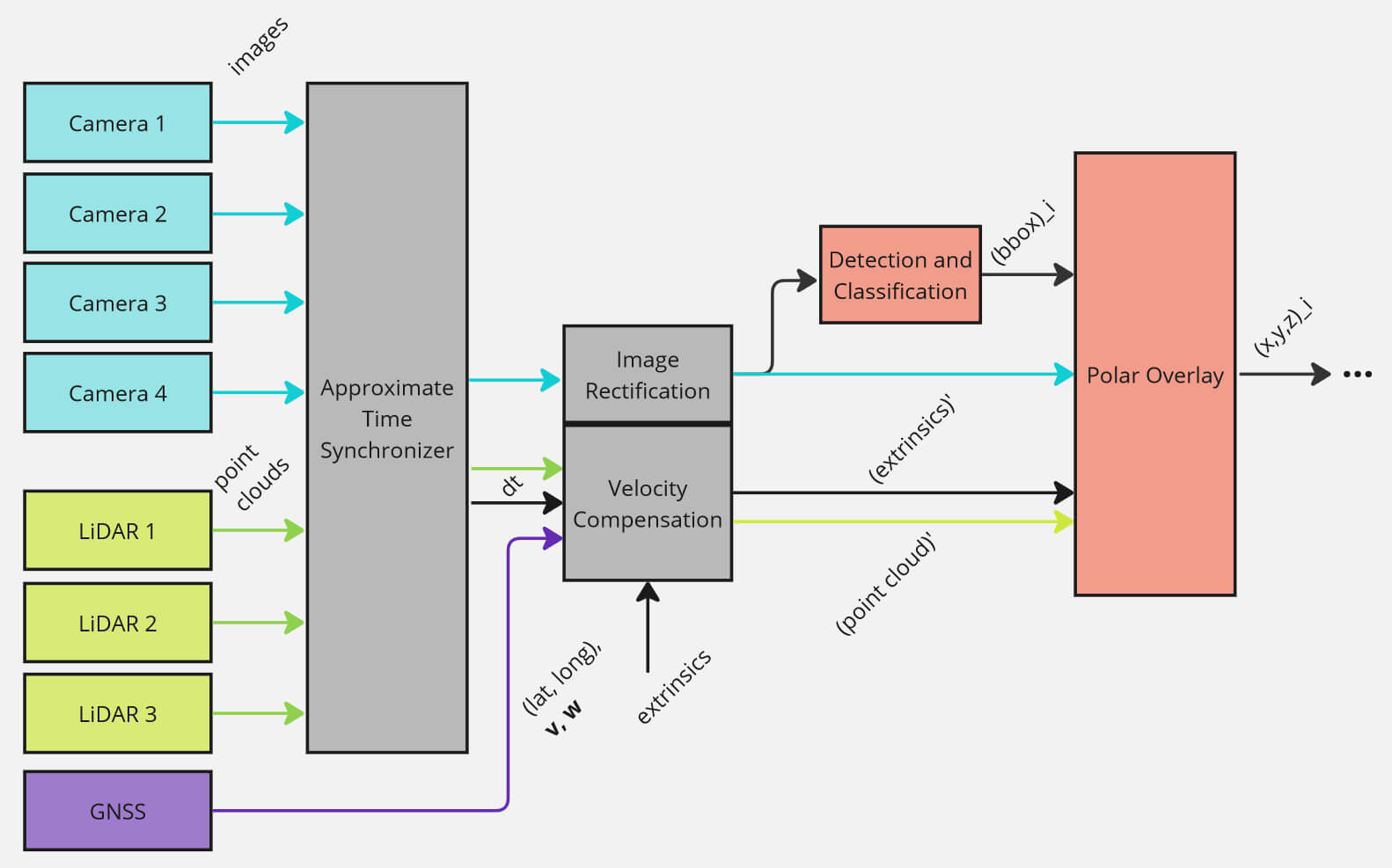

I designed the LiDAR-Camera overlay system for the Year II and III iterations of Queen's AutoDrive. The goal of the system is to obtain the 3D relative position of detected objects. The system asynchronously takes as input four image streams at 15 Hz, three point clouds (two at 15 Hz, one at 30 Hz), and GNSS data at 80 Hz. The system must not become a bottleneck for the full stack, which runs at 10 Hz, so the target rate was 25 Hz.

The previous implementation projected every point of every point cloud into the image plane. Although it achieved a lateral error of ±15 cm, this approach is inefficient because all points are projected, including those that fall far outside any detected object.
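For reference, a project-every-point baseline can be sketched as follows. This is a minimal illustration, not the team's actual code: it assumes an ideal pinhole camera without lens distortion, a camera optical frame with z pointing forward, and hypothetical names (`project_points`, `T_cam_lidar`, `K`) chosen for this example.

```python
import numpy as np

def project_points(points_lidar, T_cam_lidar, K):
    """Project all LiDAR points into the image (pinhole model, no distortion).

    points_lidar: (N, 3) points in the LiDAR frame.
    T_cam_lidar:  (4, 4) extrinsic transform, LiDAR frame -> camera frame (assumed name).
    K:            (3, 3) camera intrinsic matrix.
    Returns pixel coordinates (M, 2) and camera-frame points (M, 3)
    for the M points in front of the lens.
    """
    # Convert to homogeneous coordinates and move into the camera frame.
    ones = np.ones((points_lidar.shape[0], 1))
    pts_cam = (T_cam_lidar @ np.hstack([points_lidar, ones]).T).T[:, :3]

    # Discard points behind the lens (assumes the camera z axis points forward).
    pts_cam = pts_cam[pts_cam[:, 2] > 0.0]

    # Perspective divide, then apply the intrinsics to get pixel coordinates.
    normalized = pts_cam / pts_cam[:, 2:3]
    uv = (K @ normalized.T).T[:, :2]
    return uv, pts_cam
```

Every point goes through the full transform and projection here, so the cost scales with the total size of all point clouds rather than with the number of detected objects.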

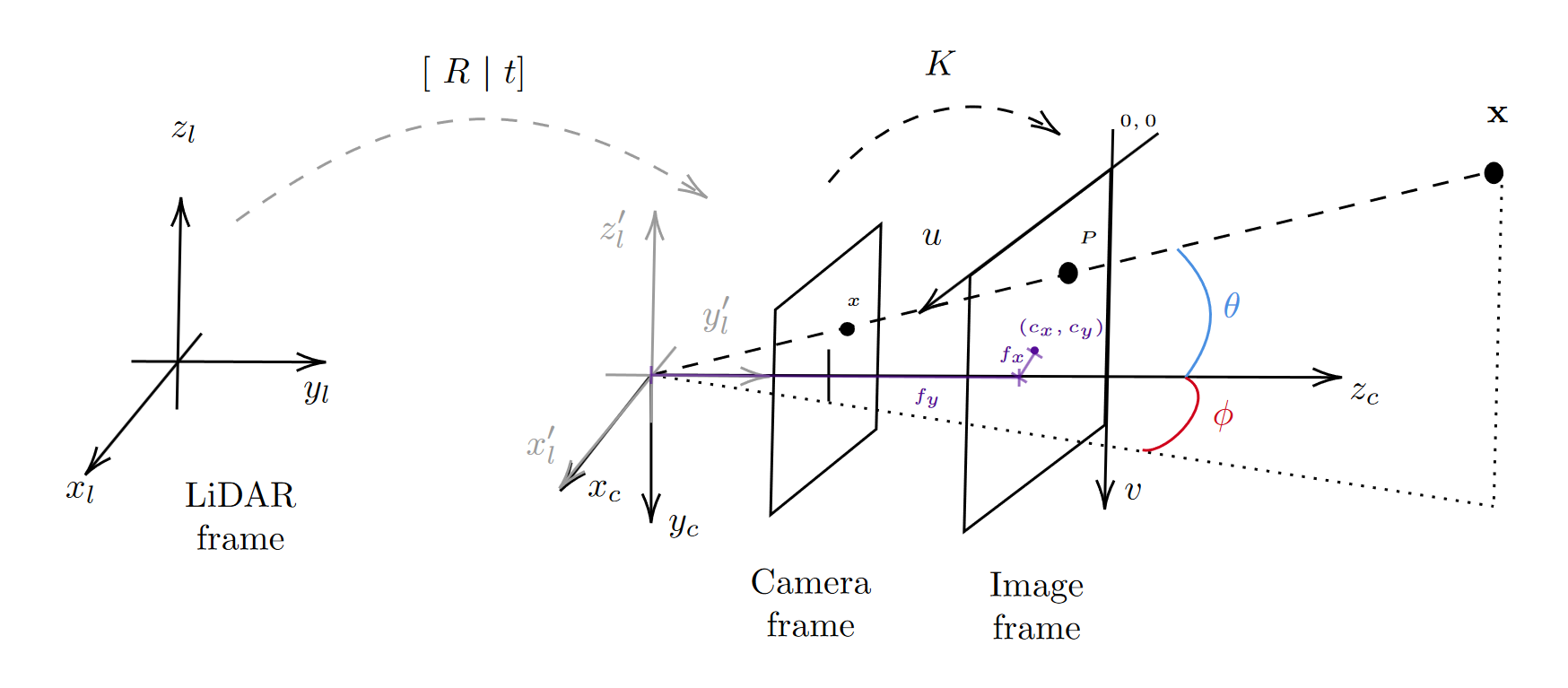

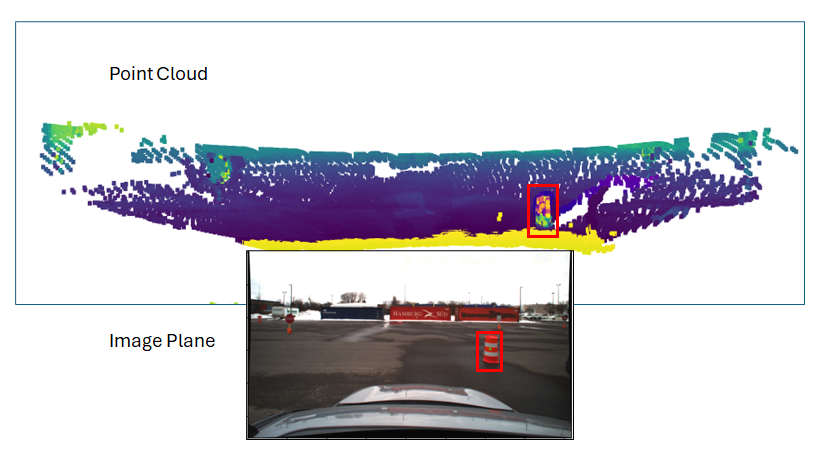

Instead, the novel approach finds a polar relationship between the camera and LiDAR data, which reduces the subset of points to be projected, similar to passing a plane in front of a projector. To achieve this, the camera intrinsics provide the focal length and the center of the image plane (distinct from the image itself). Applying trigonometry, the vector field passing from the lens to the region of interest can be expressed as rotations from the x and y axes. After the point cloud is transformed to the lens frame with the extrinsic parameters, the data can be filtered to the region that satisfies the polar constraints.
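The angular filtering idea can be sketched roughly as below. This is an illustrative sketch under stated assumptions, not the system's actual implementation: it assumes an ideal pinhole camera without distortion, a camera optical frame with x right, y down, and z forward, and a region of interest given as a pixel bounding box. The names `roi_angle_bounds`, `filter_points_in_roi`, `T_cam_lidar`, and `box` are hypothetical.

```python
import numpy as np

def roi_angle_bounds(box, K):
    """Convert a pixel box (u_min, v_min, u_max, v_max) into angular bounds.

    Returns (yaw_lo, yaw_hi) and (pitch_lo, pitch_hi) in radians, measured
    from the optical axis using the focal lengths and principal point in K.
    """
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    u_min, v_min, u_max, v_max = box
    yaw = np.arctan2(np.array([u_min, u_max]) - cx, fx)
    pitch = np.arctan2(np.array([v_min, v_max]) - cy, fy)
    return yaw, pitch

def filter_points_in_roi(points_lidar, T_cam_lidar, K, box):
    """Keep only the LiDAR points whose bearing falls inside the box's angular bounds."""
    # Move the cloud into the camera (lens) frame using the extrinsics.
    R, t = T_cam_lidar[:3, :3], T_cam_lidar[:3, 3]
    pts_cam = points_lidar @ R.T + t
    x, y, z = pts_cam[:, 0], pts_cam[:, 1], pts_cam[:, 2]

    # Bearing of each point relative to the optical axis.
    yaw_pts = np.arctan2(x, z)
    pitch_pts = np.arctan2(y, z)

    (yaw_lo, yaw_hi), (pitch_lo, pitch_hi) = roi_angle_bounds(box, K)
    mask = (
        (z > 0.0)
        & (yaw_pts >= yaw_lo) & (yaw_pts <= yaw_hi)
        & (pitch_pts >= pitch_lo) & (pitch_pts <= pitch_hi)
    )
    return pts_cam[mask]
```

Because the mask is computed from angles alone, no per-point pixel projection is needed to decide which points belong to a detection; only the surviving subset would need further processing.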